Concepts of Design Assurance for Neural Networks (CoDANN)

Building Trust in AI for Flight Systems

For decades, aviation software has been built on the principle of deterministic logic. Give the system input A, and it will always produce output B. This predictability is the bedrock of aviation safety certification, where every possible path can be tested, verified, and traced back to a requirement. Artificial intelligence breaks that contract. Modern neural networks have shown remarkable skill in vision and perception tasks relevant to flight, from obstacle detection to runway tracking, but their performance gains come at a cost: complexity. As these models grow deeper and more data-driven, their inner workings become harder to verify, explain, or even fully reproduce.

This creates what regulators now call the “assurance gap.” How can agencies like the Federal Aviation Administration (FAA) or the European Union Aviation Safety Agency (EASA) certify a system whose decision-making can’t be perfectly predicted or explained?

This is the exact problem that the Concepts of Design Assurance for Neural Networks (CoDANN) framework, a foundational project by EASA and Daedalean, was designed to solve. Instead of trying to verify the final, opaque neural network, the framework’s breakthrough was to shift the focus to certifying the entire process that created it. This introduced a new methodology called Learning Assurance.

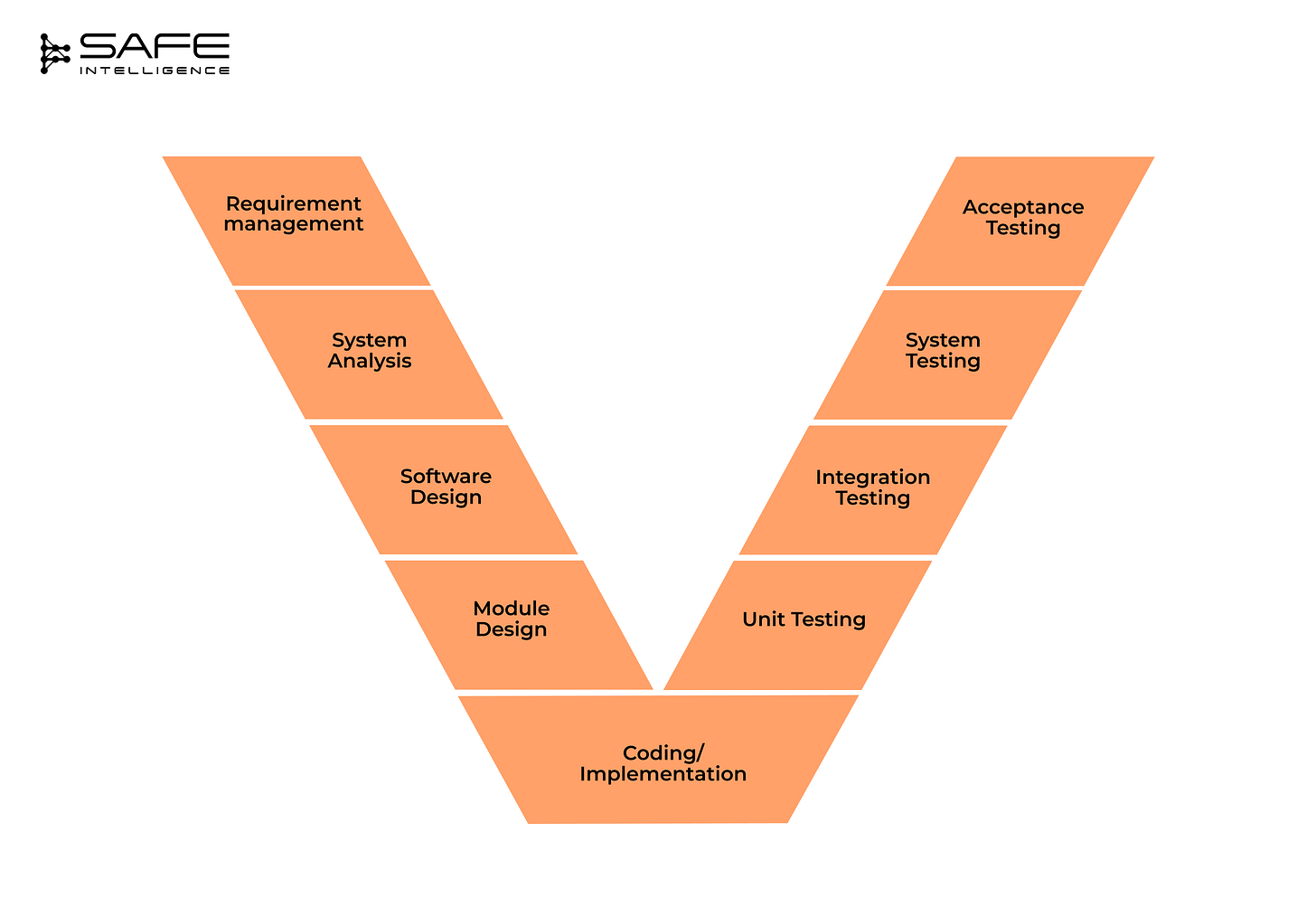

To appreciate this shift, we first need to look at the established standard for aviation software: the traditional V-shaped development cycle.

This model is the classic picture of deterministic engineering: it moves down through requirements, design, and implementation, then climbs back up through testing and validation. It gives aviation software provable traceability: every requirement is explicitly validated by a corresponding test, and every piece of implementation traces back to a design decision.

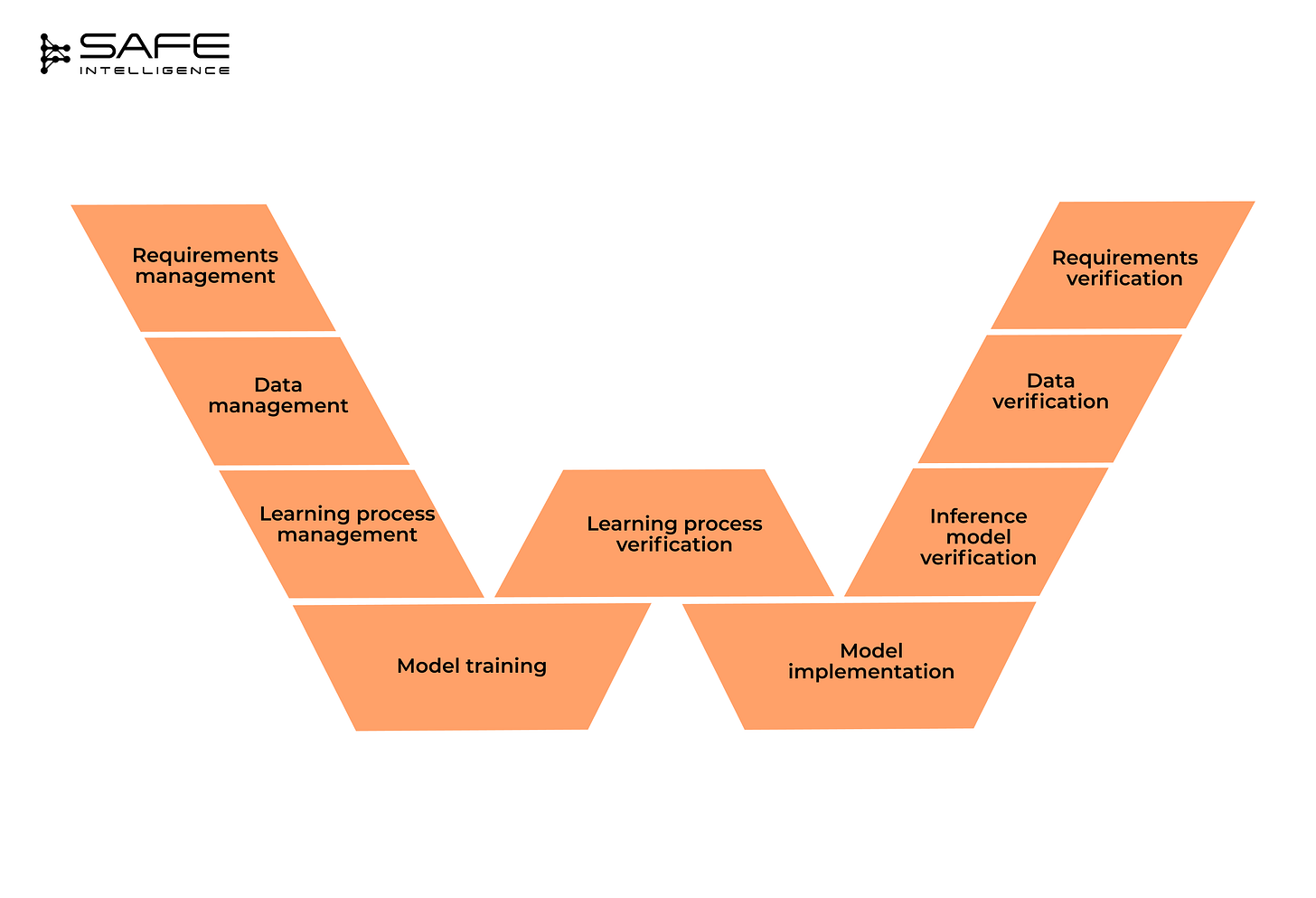
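The traceability idea behind the V-model can be sketched in a few lines. This is only an illustration, not a certification tool: the `Requirement` class, the `untraced` helper, and the requirement and test IDs are all hypothetical names invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    req_id: str
    text: str
    # IDs of the tests that claim to verify this requirement (hypothetical)
    test_ids: list[str] = field(default_factory=list)


def untraced(requirements: list[Requirement], executed_tests: set[str]) -> list[str]:
    """Return the IDs of requirements that no executed test traces back to."""
    return [
        r.req_id
        for r in requirements
        if not any(t in executed_tests for t in r.test_ids)
    ]


# Hypothetical requirements, purely for illustration
requirements = [
    Requirement("REQ-001", "Warn when altitude drops below the limit", ["TST-010"]),
    Requirement("REQ-002", "Reject out-of-range sensor input", []),
]

gaps = untraced(requirements, executed_tests={"TST-010"})
print(gaps)  # ['REQ-002']
```

A check like this is easy when requirements map to hand-written code and tests. It has no obvious equivalent for behaviour that a network learned from data.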

The V-model’s logic breaks down when the ‘Implementation’ phase is no longer a human writing explicit, verifiable rules but a learning system (a neural network) that infers implicit patterns from data. The result is a system whose logic is not fixed and can evolve as the data itself shifts over time. This phase now consists of the dataset and the training algorithm, two elements that the V-model was never designed to handle. CoDANN’s answer was not to discard the V-model but to expand it into a W-model.

Here’s what each stage means in the W-model:

Evolution of CoDANN

The original CoDANN report (2020) and its W-model provided the foundational strategy: certify the process, not just the product. Its 2021 successor, CoDANN II, extended this framework to practical deployment, tackling the challenges of implementation and inference assurance (such as quantisation, hardware mapping, and timing analysis) while also formally adding explainability, system integration, and robust runtime out-of-distribution (OOD) monitoring as core pillars of trustworthy AI.

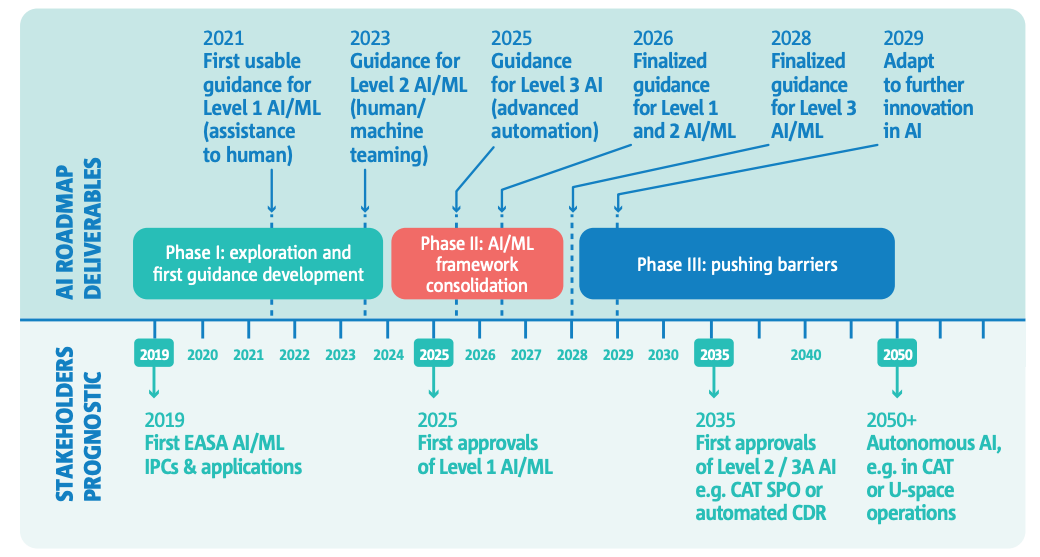

Europe’s path: EASA formally adopted these concepts in its AI Roadmap 2.0 (2023), making Learning Assurance a core pillar of its official strategy. The approach is pragmatic: keep the existing safety frameworks, but add the new ML-specific processes and evidence required to scale from simple assistance to higher autonomy.

The US path: the FAA’s Roadmap for AI Safety Assurance (2024) followed suit. It sets out the principles for adopting AI within the existing certification scaffolding, emphasising incremental approvals, human-in-the-loop operation, and lifecycle assurance that covers both design time and operation time.

While each regulator has its own roadmap, their destination is the same. Both agree that there can be no widespread adoption of AI without a new, rigorous body of scientific proof or quantified assurance evidence.

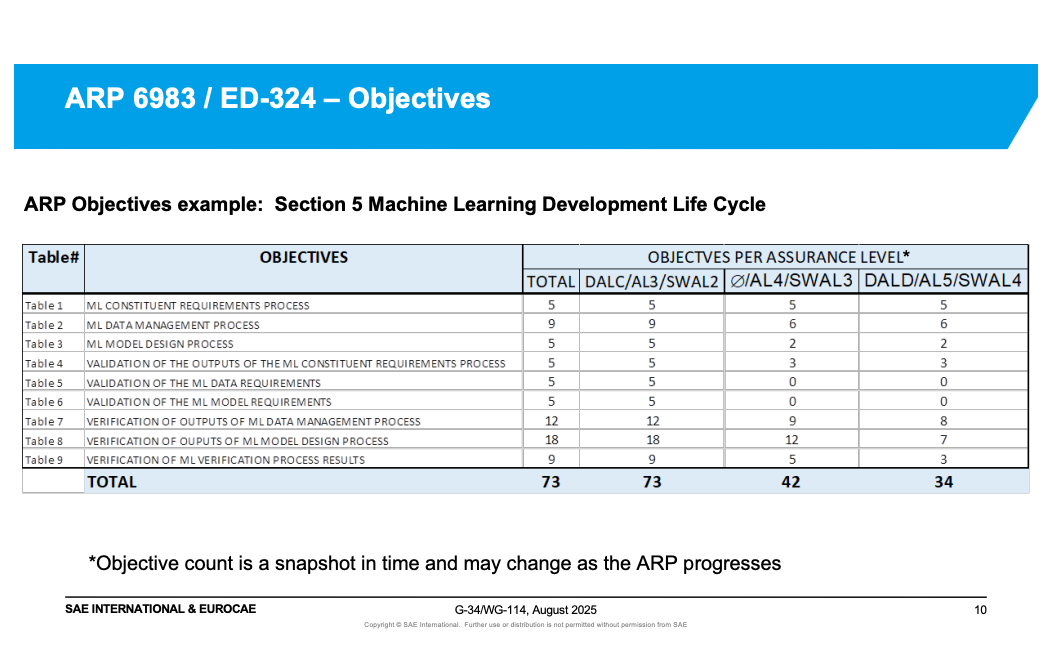

To bridge national frameworks, SAE G-34 and EUROCAE WG-114 are developing the first globally harmonised AI assurance rulebook: ARP 6983 / ED-324 (Development and Assurance guidelines for Aeronautical Systems and Equipment Implemented with Machine Learning). The forthcoming standard turns the W-model into an actionable compliance checklist, specifying how to produce certifiable evidence for data management, training, model verification, configuration control, and runtime assurance.

To illustrate what that looks like in practice, the draft ARP 6983 / ED-324 defines explicit objectives for every phase of the Machine Learning Development Lifecycle (MLDL). Each assurance level has its own set of mandatory objectives and distinct assurance checkpoints.

Beyond the Checklist

The ARP 6983 / ED-324 standard provides the compliance checklist, but how do engineers actually prove that a complex, probabilistic neural network is safe? This is achieved through a multi-layered defence:

Formal Verification: This is where the strategy shifts from exhaustive testing to mathematical proof that the model satisfies specific safety properties. It is impossible to test everything the AI might see: every possible weather condition, every type of runway, every time of day. Instead of only searching for failures, engineers prove their absence over a whole range of inputs or, where the proof fails, obtain a concrete counterexample.
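One simple member of this family of techniques is interval bound propagation: push a whole box of inputs through the network at once and bound every output it could produce. The sketch below is illustrative only. The tiny two-layer ReLU network, its weights, and the helper names (`affine_bounds`, `relu_bounds`, `proves_upper_bound`) are invented for the example, and floating-point rounding is ignored, which a real verifier would have to account for.

```python
def affine_bounds(lo, hi, weights, bias):
    """Bound y = W x + b for every x in the box [lo, hi]."""
    out_lo, out_hi = [], []
    for row, b in zip(weights, bias):
        # A non-negative weight keeps the interval ends; a negative one swaps them.
        out_lo.append(b + sum(w * (l if w >= 0 else h) for w, l, h in zip(row, lo, hi)))
        out_hi.append(b + sum(w * (h if w >= 0 else l) for w, l, h in zip(row, lo, hi)))
    return out_lo, out_hi


def relu_bounds(lo, hi):
    """ReLU is monotonic, so it can be applied to each end of the interval."""
    return [max(0.0, v) for v in lo], [max(0.0, v) for v in hi]


def output_bounds(lo, hi, layers):
    """Propagate the box through affine layers with ReLU between them."""
    for i, (weights, bias) in enumerate(layers):
        lo, hi = affine_bounds(lo, hi, weights, bias)
        if i < len(layers) - 1:  # no activation after the final layer
            lo, hi = relu_bounds(lo, hi)
    return lo, hi


def proves_upper_bound(lo, hi, layers, limit):
    """True means proven for ALL inputs in the box; False means inconclusive."""
    _, out_hi = output_bounds(lo, hi, layers)
    return out_hi[0] <= limit


# Hypothetical 2-2-1 network; the weights are made up for illustration.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -0.25]),
    ([[1.0, -2.0]], [0.1]),
]
box_lo, box_hi = [0.0, 0.0], [1.0, 1.0]

print(proves_upper_bound(box_lo, box_hi, layers, limit=1.5))  # True
print(proves_upper_bound(box_lo, box_hi, layers, limit=1.0))  # False
```

The bounds are conservative, so a `False` result does not mean the property is violated, only that this method could not prove it. Complete verifiers refine the analysis until they return either a proof or a concrete counterexample.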

Overarching Properties (OPs): A concept pioneered by NASA, OPs take a pragmatic approach. Rather than proving everything about the AI, you prove a small set of safety boundaries that must never be crossed. For example, the aircraft will always maintain a safe distance from other aircraft. These rules provide a provable safety guarantee, no matter what the complex AI model thinks it is seeing.

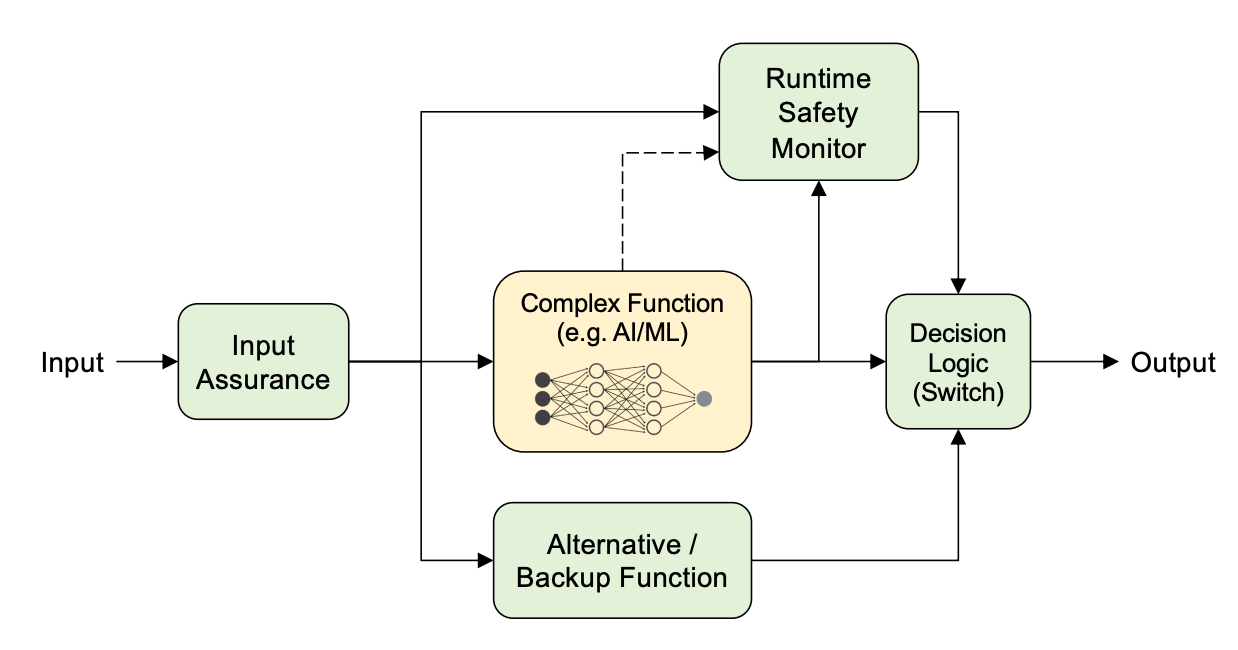

Runtime Assurance (RTA): This is the system’s active safety net, operating during flight like a co-pilot that never improvises. It assumes that despite the best design, the AI might still encounter a bizarre edge case. A simple, verified controller (the Checker) runs in parallel with the complex AI (the Doer). The AI can make high-level decisions, but every command it sends is screened by the Checker. If a command ever looks unsafe or uncertain, say the AI suggests a turn that is too sharp or a descent that exceeds its limits, the Checker immediately blocks it and replaces it with a safe, pre-approved manoeuvre. This design means that even if the AI encounters something it was never trained on, the aircraft stays inside a mathematically defined safety envelope.
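The Doer/Checker pattern described above can be sketched as follows. Everything here is an assumption made for illustration: the `Command` fields, the envelope limits, the confidence threshold, and the wings-level fallback are hypothetical, and a real system would hand over to a verified recovery controller rather than a fixed command.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Command:
    bank_angle_deg: float
    vertical_speed_fpm: float


# Hypothetical envelope limits, not taken from any standard or aircraft.
MAX_BANK_DEG = 25.0
MAX_DESCENT_FPM = -1000.0

# Hypothetical pre-approved manoeuvre: wings level, hold altitude.
SAFE_FALLBACK = Command(bank_angle_deg=0.0, vertical_speed_fpm=0.0)


def is_inside_envelope(cmd: Command) -> bool:
    """Simple, auditable limit checks; NaN values fail both comparisons."""
    return (
        abs(cmd.bank_angle_deg) <= MAX_BANK_DEG
        and cmd.vertical_speed_fpm >= MAX_DESCENT_FPM
    )


def checker(cmd: Command, confidence: float, min_confidence: float = 0.9) -> Command:
    """Pass the Doer's command through only if it is confident and in-envelope."""
    confident = confidence >= min_confidence  # False for NaN as well
    if confident and is_inside_envelope(cmd):
        return cmd
    return SAFE_FALLBACK


# The Doer (the neural network) is represented here only by its outputs.
print(checker(Command(10.0, -500.0), confidence=0.95))  # passed through unchanged
print(checker(Command(45.0, -500.0), confidence=0.95))  # too steep: fallback
print(checker(Command(10.0, -500.0), confidence=0.50))  # too uncertain: fallback
```

The Checker is deliberately small and free of learned components, so it can be verified with conventional methods even though the Doer cannot.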

These proven properties and assurance methods are then assembled into a formal safety case: a structured, auditable argument, supported by evidence, that the entire system is acceptably safe.

All these initiatives are converging on a single global vision: trust built through transparency, process, and proof. Certification is no longer a one-time hurdle but a continuous assurance loop, tracing safety from data to decision to deployment.

Stay safe 💚 and here’s to intelligence that can safely evolve in the sky.